Classification is one of the most instinctive behaviors that humans exhibit. It is also a foundation for all cultural and scientific advancements, be it the taxonomy trees of evolution, Freudian classifications of anxieties and disorders or the elements in periodic tables. The moment we interact with an entity or an experience through any of our senses our mind immediately starts classifying it as good or bad, pleasurable or painful, or in multi-class buckets. Even now your mind is classifying this article into ‘is it helpful’ or ‘waste of my time’ 🙂

Classification & Technology

Today, technology is playing a key and transformational role in classifying content on a broad range of topics in social media, blogs, and news sites; each of these often seem to suffer from information overload. There is a need for a process that connects and/or classifies all the information into simpler foundational themes. The ability to classify content can create tremendous value, something that is hard to quantify as a product worth so many dollars and cents. Facebook demonstrates that there is inherent value in finding themes and relationships in people’s opinions, behaviors, and public content, as well as providing targeted marketing; yet it is free to the vast majority of its users, and arguably must be so in order to provide the community it does.

There are two ways one could classify information:

Binary classification: Up vote vs. down vote, manifested in instances as varied as sentiment analysis and fraud detection

Multi-class classification: Examples include the animal kingdom, email classification, and topic modeling

Ability to Learn

Humans also exhibit the ability to learn from mistakes, and the grit to be successful against all odds. In investing, let’s take ESG as an example. The investment beliefs here started with negative screening. For example, energy companies focusing on coal and oil always carried negative scores. With new datasets, we have adapted ways of classifying investments by also integrating forward looking views. If the same energy firm is now also investing in renewable sources, and has positive governance and social implications, it also gets some positive score. How well can a machine classifier learn as more data is available, or from sub-optimal decisions, and continue to unlearn and re-learn?

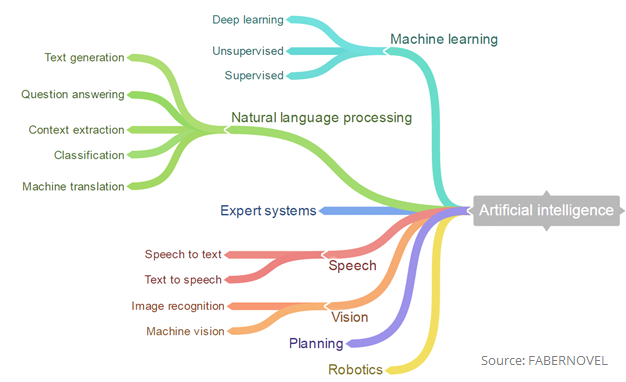

Machine Classification: From Keywords to Learning

The simplest form of classification in the machine world is keyword matches. But any such classification does not capture the context, the intent, or multi-dimensional characteristics.

A classifier is called ‘supervised’ if training samples are needed for its development and a function is learned to map inputs to outputs. The goal of such a classifier is to predict a categorical outcome from a vector of inputs.

Different from unsupervised classifiers, which group similar individuals into categories, supervised classifiers label their inputs. When a test variable arrives, most classifiers can help to predict the probability that the variable belongs to a particular category given its features.

Some of the most common scenarios where a supervised classifier is adopted are email classification (if you use Gmail, you are familiar with primary, social, and promotions).

Commercialization: Accuracy, Speed and Implementation

We’ll discuss one of the techniques we researched internally – XGBoost (Extreme Gradient Boosting), is a supervised learning system. The impact of XGBoost is recognized in different machine learning or data mining challenges, such as Kaggle, the platform for predictive modelling and analytics competitions. XGBoost is a popular algorithm that performs well on a wide range of problems, such as generic text classification, customer behavior prediction, malware classification, etc.

Literally speaking, XGBoost is not a new model, but one that follows the principle of regularized gradient tree boosting. According to the author of XGBoost, it “actually refers to the engineering goal to push the limit of computations resources for boosted tree algorithms.”.

The fundamental model of XGBoost, is based on a tree ensemble model. The sum of scores from each tree predicts the final result. Generally, in engineering terms, gradient boosting combines several weak learners into a strong learner and performs the optimization of a differentiable loss function iteratively.

XGBoost further improves the speed of computation by parallel processing, taking advantage of sparse aware implementation, and handling missing values automatically, as the tree structures have default directions.

Moreover, it is also designed for continuous training. All such settings allow XGBoost to outperform its peers by learning faster and more accurately, giving it commercial competitiveness

Classification at DiligenceVault

Due diligence is another world which is information heavy, where information is also changing spontaneously, and consequently classified and re-classified at multiple decision points. Asset managers receive various information requests from different investors, where similar information can be bucketed for downstream efficiency and analysis. Next, investors benefit from applying public and private inputs into a classifier in arriving at labels and flags and in identifying areas of further workflow and diligence.

Join us as we continue to integrate technologies for successful diligence decisions.